The Attention Span of a Protein Designer

Most of what passes for protein design these days is better described as substitution.

You take a known backbone, swap in plausible residues based on energy or evolutionary alignment, and run it through simulation until the numbers settle into something stable. It works, in a way, but it’s more mechanical than intelligent.

Design, real design, implies something else. Some sense of local awareness. Some ability to understand why a particular residue fits where it does.

That’s where a team from Beijing Normal University and the State Key Laboratory of Multiphase Complex Systems comes in, with Shuaibing Ling as lead author.

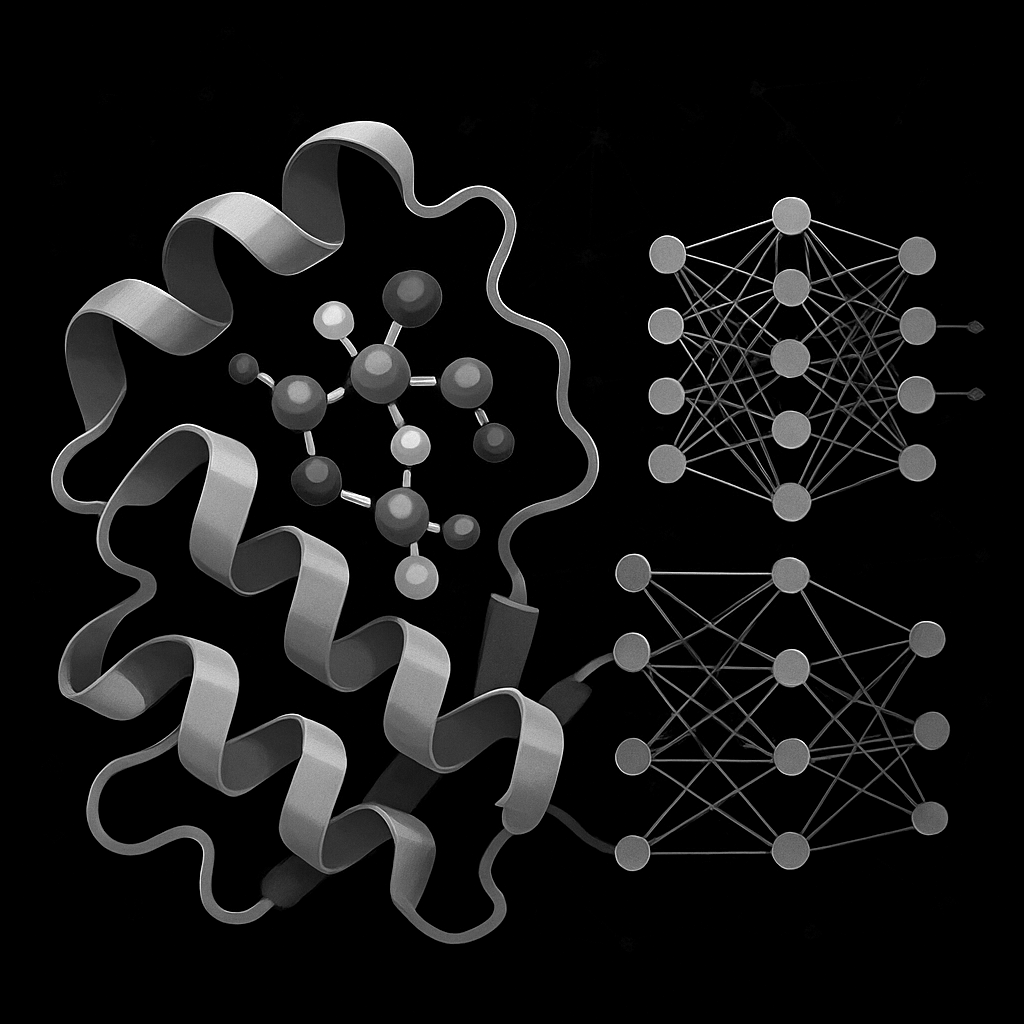

In their 2025 paper published in the Journal of Chemical Information and Modeling, they offer up EMOCPD, an attention-based model for computational protein design that doesn’t just guess; it listens. Not globally, but locally. It looks at each residue in the context of its surrounding structural environment and decides, probabilistically but with purpose, what amino acid belongs in that slot.
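To make "surrounding structural environment" concrete, here is a minimal sketch of one way to collect the atoms near a residue slot. It is not the authors' code: the function name `local_environment`, the 10 angstrom cutoff, and the coordinates are all assumptions chosen for illustration.

```python
import math

def local_environment(center, atoms, radius=10.0):
    """Return the atoms within `radius` angstroms of `center`, nearest first.

    center : (x, y, z) of the residue slot being designed
    atoms  : list of (label, (x, y, z)) tuples
    radius : cutoff in angstroms; 10.0 is an illustrative assumption
    """
    nearby = []
    for label, coords in atoms:
        distance = math.dist(center, coords)
        if distance <= radius:
            nearby.append((distance, label))
    nearby.sort()  # nearest neighbors first
    return [(label, distance) for distance, label in nearby]

# Made-up coordinates, for illustration only.
atoms = [
    ("CA_12", (1.0, 0.0, 0.0)),
    ("O_47", (0.0, 3.0, 4.0)),
    ("N_88", (20.0, 0.0, 0.0)),
]
env = local_environment((0.0, 0.0, 0.0), atoms)
```

In this toy case the distant atom `N_88` falls outside the cutoff, so only two neighbors are left for a model to consider.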

EMOCPD, which stands for Efficient Model of Contextual Protein Design, borrows its brainpower from the world of language models. But instead of treating a protein like a sentence strung from left to right, it interprets it as a spatial network of influence.

Every side chain and backbone atom has something to say, but the model learns which ones deserve to be heard.

This is what attention means here: weighting. Emphasis. Filtering signal from noise based on context, not rules.
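For readers who want to see the weighting itself, the sketch below shows generic scaled dot-product attention for a single query. It illustrates the general mechanism, not EMOCPD's actual architecture; the names `attend`, `query`, `keys`, and `values`, and the toy feature vectors, are assumptions.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector.

    query  : features of the residue slot being designed (hypothetical)
    keys   : one feature vector per neighbor
    values : the information each neighbor contributes
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # The context vector is the weighted average of the neighbors' values.
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context

# Toy microenvironment: three neighbors with made-up 2-D features.
query = [1.0, 0.0]
keys = [[4.0, 0.0], [0.0, 4.0], [-4.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
weights, context = attend(query, keys, values)
```

The neighbor whose key aligns with the query gets most of the weight, the orthogonal one gets less, and the opposing one gets almost none. That is the "deserve to be heard" idea in numbers.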

Where this gets interesting is in the performance. According to the authors, EMOCPD outperforms existing methods, particularly in proteins that are low in negatively charged residues. That specificity is not a flaw, but a window into what the model is actually doing. Negative charges introduce complexity. They tug at the molecular fabric in ways that can confound simpler local-context models. But when that chaos is reduced, EMOCPD thrives.
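"Low in negatively charged residues" is easy to quantify for any sequence. The helper below is a small illustration, assuming the standard one-letter codes D (aspartate) and E (glutamate) for the negatively charged amino acids. The function name is hypothetical, and no cutoff for "low" is implied, since none is given here.

```python
NEGATIVE = {"D", "E"}  # aspartate and glutamate, one-letter codes

def negative_fraction(sequence):
    """Fraction of residues in `sequence` that are negatively charged."""
    seq = sequence.upper()
    if not seq:
        return 0.0  # avoid dividing by zero on an empty sequence
    return sum(residue in NEGATIVE for residue in seq) / len(seq)

# Made-up six-residue peptide: one D and one E out of six positions.
frac = negative_fraction("MKDEAL")
```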

It finds the patterns others miss because it’s not looking to brute-force an answer—it’s looking to understand its neighborhood.

This shift from global to local is not new in concept, but it’s rarely executed with this kind of architectural elegance. EMOCPD doesn’t simulate the whole protein in a physics engine. It doesn’t sample fragments and stitch them into Frankenstein monsters. It operates more like a surgical editor—focusing in, attending to the immediate surroundings, and filling in the blank with something it knows will fit.

The authors don’t pretend it’s a universal tool.

Acidic proteins, those rich in negatively charged residues, still challenge the model. And without a deeper look at the full text, we can’t say how it performs on edge cases, large folds, or exotic scaffolds.

But the architecture is promising. It suggests that design can move beyond guesswork and closer to inference. It hints at a future where AI is not just predicting sequences but understanding the constraints that make those sequences valid.

There’s a quiet kind of intelligence in EMOCPD. One that doesn’t seek control over the whole molecule, but instead learns to pay attention.

And in protein design, attention is everything. Fold follows form, and form, it turns out, is local.